Dowell Website Crawler API

Transform web data into actionable insights with Dowell’s advanced crawler API.


A Website Crawler API is a tool that allows you to automate the process of extracting information from websites. Instead of manually visiting each webpage, you can use our API to fetch data programmatically, saving you time and effort.

Understanding API Calls

To effectively utilize our Website Crawler API, it’s essential to understand the various API calls and their functionalities. Below, we’ll break down the key components of making API requests:

Endpoints: These are the URLs that you’ll use to interact with our API. Each endpoint serves a specific function, such as retrieving website data or submitting crawling tasks.

Parameters: Parameters are additional pieces of information that you include in your API requests to customize the data retrieval process. These may include criteria such as the URL of the website to crawl or specific data to extract.

Request Methods: Our API supports different request methods, such as GET, POST, PUT, and DELETE. Each method serves a different purpose, such as retrieving data, submitting new tasks, updating existing tasks, or deleting tasks.

Response Formats: After making an API request, you’ll receive a response from our server. The response format may vary depending on the endpoint and the type of data requested. Common response formats include JSON, XML, or HTML.

By familiarizing yourself with these components, you’ll be equipped to make API calls confidently and efficiently.
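As a rough illustration of how these four pieces fit together, the sketch below assembles a request with Python's standard library without sending it. The endpoint and field names are taken from the code examples later on this page. The function name build_crawl_request is only illustrative, and sending a reduced info_request with a JSON content type is an assumption based on those examples rather than a confirmed requirement.

```python
import json
import urllib.request

# Endpoint: taken from the code examples later on this page.
ENDPOINT = "https://www.uxlive.me/api/v1/website-info-extractor/"


def build_crawl_request(web_url, api_key):
    """Assemble (but do not send) a POST request for the crawler API."""
    # Parameters: what to crawl and which kinds of data to extract.
    params = {
        "web_url": web_url,
        "max_search_depth": 0,
        "info_request": {"emails": True, "phone_numbers": True},
        "api_key": api_key,
    }
    body = json.dumps(params).encode("utf-8")
    # Request method: POST, with the parameters carried as a JSON body.
    return urllib.request.Request(
        ENDPOINT,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_crawl_request("https://example.com/", "<YOUR API KEY>")
print(request.get_method(), request.full_url)
print(request.get_header("Content-type"))
print(json.loads(request.data)["web_url"])
```

Passing the returned object to urllib.request.urlopen would actually send it; the response body could then be decoded according to its format, for example with json.loads for JSON.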

Key Features

Website Crawling

Automated traversal of all pages within the website. Systematically fetches HTML content from each webpage for further processing.

Email Extraction and Verification

Identifies and extracts email addresses found across the website's pages. Utilizes verification techniques to ensure the validity of extracted email addresses.

Social Media Link Identification

Scans the website to locate and extract URLs associated with social media profiles. Identifies various social media platforms linked to the website for comprehensive engagement analysis.

Logo Retrieval

Retrieves the website's logo image from its pages. Supports various image formats and resolutions to accommodate different design preferences.

Phone Number Gathering

Extracts all phone numbers listed on the website, including contact information and business lines. Supports detection of phone numbers in various formats for comprehensive data collection.

Data Enrichment and Structuring

Organizes extracted data into a structured format for easy integration with other systems. Enriches data with metadata and context, enhancing its utility for downstream applications.

How Does it Work?

- Input URLs: Provide the API with a list of URLs or a starting point from which to begin crawling.

- Page Retrieval: The crawler programmatically visits each provided URL, fetching the HTML content of the webpages.

- HTML Parsing: Extract relevant data from the HTML content, such as text, images, links, and metadata.

- Data Filtering: Apply filters to refine the extracted data, focusing on specific elements or information of interest.

- Structured Output: Organize the extracted data into a structured format, such as JSON or XML, for easy integration into applications or databases.

- Output Delivery: Receive the structured data via API response, enabling seamless integration into your software workflow for further analysis or processing.
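The parsing, filtering, and structuring steps above can be sketched with Python's standard library. This is not Dowell's implementation, which is not described on this page; it is a minimal illustration of the general technique. The class and function names, the email pattern, the list of social hosts, and the output keys are all assumptions made for the example.

```python
import json
import re
from html.parser import HTMLParser

# Simplified email pattern, for illustration only.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


class LinkAndTextParser(HTMLParser):
    """Collects href values and text content from an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []
        self.text_parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_data(self, data):
        self.text_parts.append(data)


def extract(html, social_hosts=("facebook.com", "linkedin.com")):
    # HTML parsing: pull links and text out of the markup.
    parser = LinkAndTextParser()
    parser.feed(html)
    text = " ".join(parser.text_parts)
    # Data filtering: keep only the elements of interest.
    emails = sorted(set(EMAIL_RE.findall(text)))
    socials = [link for link in parser.links
               if any(host in link for host in social_hosts)]
    # Structured output: a plain dict that serialises directly to JSON.
    return {"emails": emails, "social_media_links": socials, "links": parser.links}


# Stand-in for a page fetched during the page-retrieval step.
sample = """
<html><body>
  <p>Contact: info@example.com</p>
  <a href="https://www.facebook.com/example">Facebook</a>
  <a href="/about">About</a>
</body></html>
"""
print(json.dumps(extract(sample), indent=2))
```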

Benefits of Using the Website Crawler API

- Efficiency: Say goodbye to manual web scraping. Our API automates the process, allowing you to extract data from multiple websites quickly and efficiently.

- Accuracy: With our API, you can ensure consistent and reliable data extraction, reducing the risk of human error.

- Customization: Tailor your web crawling experience to your specific needs with customizable parameters and endpoints.

- Scalability: Whether you’re crawling one website or hundreds, our API scales effortlessly to meet your demands.

- Cost-Effectiveness: No need for expensive software or dedicated resources. Our API offers cost-effective web crawling solutions for businesses of all sizes.

Postman Documentation

For detailed API documentation, including endpoint descriptions, request and response examples, and authentication details, please refer to the Postman API documentation.

Dowell Website Crawler API Demonstrative Scenarios

In the following scenarios, Dowell will furnish comprehensive instructions on obtaining the Service key and guide you through the steps to use the API. You’ll find examples in various formats such as Python, PHP, React, Flutter, and WordPress in the tabs below. Feel free to explore the examples in each tab for practical insights.

Dowell Website Crawler API Use Cases

Dive into the world of Website Crawler API! Discover how it revolutionizes content aggregation, SEO analysis, and data extraction. From tracking industry trends to optimizing website performance, this video unveils the power of automated data gathering. Don’t miss out – watch now for a glimpse into seamless data solutions!

Frequently Asked Questions (FAQs) about Dowell Website Crawler API

1. Can I crawl all pages of a website using the Dowell Website Crawler API?

Yes, you can crawl all pages of a website using our API. Just provide the website URL and set the appropriate parameters to start crawling.

2. What types of information can I extract from a website using this API?

You can extract various types of information from a website using our API, including email addresses, social media links, logos, phone numbers, and page URLs like about, contact, careers, services, and products.

3. How can I verify email addresses extracted from a website using this API?

Our API doesn’t provide email verification functionality. You’ll need to use a separate service, such as the Dowell Email API, or implement your own verification process to ensure the validity of extracted email addresses.

4. Is there a limit to the depth of search when crawling a website?

Yes, you can set the maximum search depth parameter (max_search_depth in the request body) to limit how deeply the crawler explores pages within a website. Setting it to 0 means only the specified pages will be crawled without further exploration.
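To make the effect of a depth limit concrete, here is a small offline sketch of a depth-limited crawl over an in-memory link graph. How the Dowell crawler traverses a site internally is not documented here, so the breadth-first order, the function name crawl_order, and the sample site are assumptions for illustration only.

```python
from collections import deque


def crawl_order(link_graph, start_pages, max_search_depth):
    """Return pages in the order a breadth-first crawl would visit them."""
    visited = []
    seen = set(start_pages)
    queue = deque((page, 0) for page in start_pages)
    while queue:
        page, depth = queue.popleft()
        visited.append(page)
        if depth >= max_search_depth:
            continue  # depth limit reached: do not follow this page's links
        for linked in link_graph.get(page, []):
            if linked not in seen:
                seen.add(linked)
                queue.append((linked, depth + 1))
    return visited


# Hypothetical site: each page maps to the pages it links to.
site = {
    "/": ["/about", "/contact"],
    "/about": ["/team"],
    "/contact": [],
    "/team": [],
}

print(crawl_order(site, ["/"], 0))  # ['/']
print(crawl_order(site, ["/"], 1))  # ['/', '/about', '/contact']
print(crawl_order(site, ["/"], 2))  # ['/', '/about', '/contact', '/team']
```

With a depth of 0 only the starting page is visited, which matches the behaviour described in the answer above.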

5. Can I extract only specific types of information from a website?

Yes, you can specify which types of information you want to extract from a website by setting the corresponding parameters in your API request.
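One way to build such a request is sketched below. The field names come from the request examples on this page; the helper build_info_request is hypothetical, and treating a value of False as "do not extract" is an assumption that should be checked against the Postman documentation.

```python
# Boolean fields that appear in the request examples on this page.
BOOLEAN_FIELDS = ("addresses", "emails", "links", "logos", "name", "phone_numbers")


def build_info_request(wanted):
    """Return an info_request dict that enables only the fields in `wanted`."""
    unknown = set(wanted) - set(BOOLEAN_FIELDS)
    if unknown:
        raise ValueError(f"Unknown field(s): {sorted(unknown)}")
    # Assumption: False switches a field off; the API may also accept omission.
    return {field: field in wanted for field in BOOLEAN_FIELDS}


info_request = build_info_request({"emails", "phone_numbers"})
print(info_request)
```

The resulting dictionary can be placed under the info_request key of the request body in place of the full set shown in the examples.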

6. How can I obtain support if I encounter any issues with the API?

If you encounter any issues, have questions, or need assistance with Dowell Website Crawler API, you can contact the support team for prompt assistance. Contact us at Dowell@dowellresearch.uk

7. Does the Dowell Website Crawler API support authentication?

Yes, our API supports authentication. You’ll need to include your service key in the JSON data of your request, in the api_key field. See the Postman documentation for authentication details.

8. Can I use the Dowell Website Crawler API to extract data from multiple websites simultaneously?

No, our API currently supports crawling and extracting data from one website at a time. If you need to extract data from multiple websites, you’ll need to make separate API requests for each website.
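A simple way to handle several websites is to loop over them and send one request per site. The sketch below keeps the network call behind a send function so it can run offline; crawl_sites and fake_send are hypothetical names, and the reduced info_request is an assumption made to keep the example short.

```python
def crawl_sites(web_urls, api_key, send):
    """Call `send(payload)` once per website and collect the results by URL."""
    results = {}
    for web_url in web_urls:
        payload = {
            "web_url": web_url,
            "max_search_depth": 0,
            "info_request": {"emails": True, "phone_numbers": True},
            "api_key": api_key,
        }
        results[web_url] = send(payload)
    return results


# Stand-in sender so the sketch runs offline; a real one would POST the payload.
def fake_send(payload):
    return {"requested": payload["web_url"]}


results = crawl_sites(
    ["https://example.com/", "https://example.org/"], "<YOUR API KEY>", fake_send
)
print(results)
```

In real use, send could wrap the POST call shown in the Python example on this page, for instance a function that calls requests.post with the payload and returns the decoded JSON.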

9. What happens if the website I want to crawl is password-protected?

Our API cannot crawl password-protected websites. You’ll need to ensure that the website is publicly accessible for our crawler to retrieve information from it.

10. What other APIs does Dowell UX Living Lab provide besides Dowell Website Crawler API?

Dowell UX Living Lab offers a wide range of APIs to enhance user experience and streamline various processes. These include Dowell SMS, Dowell Newsletter, Samanta Content Evaluator, and many more.

For more details and to explore the complete list of APIs provided by Dowell UX Living Lab, please visit our documentation page.

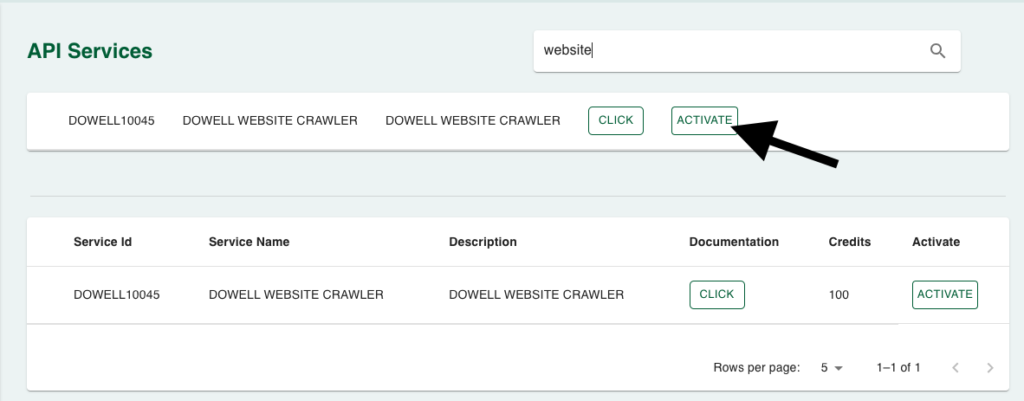

How To Get The API Key

- Access the Dowellstore website through this link: https://dowellstore.org and log in. Once on the website, navigate to the API service section and activate the DOWELL WEBSITE CRAWLER API service. The API key you need can be found in the dashboard, where it is listed as the service key. For more information, follow the instructions in the videos found at the link below.

- How to get the API key and redeem your voucher (step-by-step tutorial): you can also find a step-by-step guide on how to get the API key and activate the API(s) by following the Get The Service Key link.

- Then include the API key in the JSON request body as the api_key field, as the code examples on this page do. Replace <YOUR API KEY> with your actual API key. The endpoint URL used in those examples: https://www.uxlive.me/api/v1/website-info-extractor/

- Note: Make sure to activate your API from the Dowell API Key System link provided above.
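The code examples on this page pass the key in the api_key field of the JSON body. To avoid hard-coding it in source files, one option is to read it from an environment variable, as sketched below. The variable name DOWELL_API_KEY and both helper functions are hypothetical and not part of the Dowell API.

```python
import os


def load_api_key(env_var="DOWELL_API_KEY"):
    """Read the service key from an environment variable (name is hypothetical)."""
    api_key = os.environ.get(env_var, "").strip()
    if not api_key:
        raise RuntimeError(f"Set the {env_var} environment variable to your service key")
    return api_key


def with_api_key(payload, api_key):
    """Return a copy of the request body with the api_key field filled in."""
    return {**payload, "api_key": api_key}


# Demonstration only: set a placeholder so the sketch runs as-is.
os.environ.setdefault("DOWELL_API_KEY", "<YOUR API KEY>")
body = with_api_key(
    {"web_url": "https://example.com/", "max_search_depth": 0}, load_api_key()
)
print(sorted(body))
```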

Python Example

1. WEB CRAWLER

Define the URL of the API endpoint: We specify the URL where the API is located. In this case, it’s the endpoint for the Website Crawler API provided by “uxlive.me”.

Prepare the request body: We construct a JSON object containing the necessary parameters for the API request. This includes the target website URL, maximum search depth, and information request details such as addresses, emails, links, logos, etc.

Make a POST request: Using the requests library in Python, we send a POST request to the API endpoint specified in Step 1. We include the prepared request body in JSON format as the payload of the request.

Print the response: Finally, we print the JSON response received from the API. This will contain the extracted information from the specified website.

    import requests

    # Step 1: Define the URL of the API endpoint
    url = "https://www.uxlive.me/api/v1/website-info-extractor/"

    # Step 2: Prepare the request body in JSON format
    payload = {
        "web_url": "https://vcssbp.odishavikash.com/",
        "max_search_depth": 0,
        "info_request": {
            "addresses": True,
            "emails": True,
            "links": True,
            "logos": True,
            "name": True,
            "pages_url": ["about", "contact", "careers", "services", "products"],
            "phone_numbers": True,
            "social_media_links": {
                "all": True,
                "choices": [
                    "facebook", "twitter", "instagram", "linkedin",
                    "youtube", "pinterest", "tumblr", "snapchat"
                ]
            },
            "website_socials": {
                "all": True,
                "choices": [
                    "facebook", "twitter", "instagram", "linkedin",
                    "youtube", "pinterest", "tumblr", "snapchat"
                ]
            }
        },
        "api_key": "<YOUR API KEY>"
    }

    # Step 3: Make a POST request to the API endpoint with the prepared request body
    response = requests.post(url, json=payload)

    # Print the response
    print(response.json())
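The example above prints whatever comes back. A slightly more defensive variant might check the HTTP status and the body before using the data, as in the sketch below. The function name parse_api_response is hypothetical, and the sample body is a placeholder rather than the API's actual response schema, which is documented in Postman.

```python
import json


def parse_api_response(status_code, body_text):
    """Return the decoded JSON body, or raise with a readable message."""
    if not 200 <= status_code < 300:
        raise RuntimeError(f"API returned HTTP {status_code}: {body_text[:200]}")
    try:
        return json.loads(body_text)
    except json.JSONDecodeError as exc:
        raise RuntimeError("API response was not valid JSON") from exc


# With requests, this could be called as:
#   data = parse_api_response(response.status_code, response.text)
# The body below is a placeholder, not a real API response.
print(parse_api_response(200, '{"ok": true}'))
```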

PHP Example

1. WEB CRAWLER

Set up the API Endpoint: Replace the $url variable with the URL of the API endpoint you want to send the request to.

Prepare Request Data: Customize the $data array with the parameters you want to include in your API request. Make sure to replace <YOUR API KEY> with your actual API key.

Make the POST Request: The code sends a POST request to the API endpoint with the specified data. The response from the API is captured and displayed. If there’s an error connecting to the API, an error message is shown instead.

    <?php

    // Step 1: Set up the URL of the API endpoint
    $url = 'https://www.uxlive.me/api/v1/website-info-extractor/';

    // Step 2: Prepare the data to be sent in the request body
    $data = array(
        "web_url" => "https://vcssbp.odishavikash.com/",
        "max_search_depth" => 0,
        "info_request" => array(
            "addresses" => true,
            "emails" => true,
            "links" => true,
            "logos" => true,
            "name" => true,
            "pages_url" => array("about", "contact", "careers", "services", "products"),
            "phone_numbers" => true,
            "social_media_links" => array(
                "all" => true,
                "choices" => array(
                    "facebook", "twitter", "instagram", "linkedin",
                    "youtube", "pinterest", "tumblr", "snapchat"
                )
            ),
            "website_socials" => array(
                "all" => true,
                "choices" => array(
                    "facebook", "twitter", "instagram", "linkedin",
                    "youtube", "pinterest", "tumblr", "snapchat"
                )
            )
        ),
        "api_key" => "<YOUR API KEY>"
    );

    // Step 3: Make a POST request to the API
    $options = array(
        'http' => array(
            'header' => "Content-Type: application/json\r\n",
            'method' => 'POST',
            'content' => json_encode($data)
        )
    );

    $context = stream_context_create($options);
    $response = file_get_contents($url, false, $context);

    if ($response === FALSE) {
        // Handle error
        echo "Error: Unable to connect to the API.";
    } else {
        // Output the response
        echo $response;
    }

    ?>

React Example

1. WEB CRAWLER

Install Necessary Packages: First, we need to install Axios, a popular HTTP client for making API requests in React applications.

Create a Component: Create a React component where we’ll define the function to make the API call.

Make the POST Request: Inside the component, use Axios to make the POST request to the specified API endpoint with the required parameters.

    import React, { useEffect } from 'react';
    import axios from 'axios'; // Step 1: Import Axios for making API requests

    const WebsiteCrawler = () => {
      useEffect(() => {
        const fetchData = async () => {
          try {
            const response = await axios.post('https://www.uxlive.me/api/v1/website-info-extractor/', {
              // Step 3: Make the POST request with required parameters
              web_url: 'https://vcssbp.odishavikash.com/',
              max_search_depth: 0,
              info_request: {
                addresses: true,
                emails: true,
                links: true,
                logos: true,
                name: true,
                pages_url: ['about', 'contact', 'careers', 'services', 'products'],
                phone_numbers: true,
                social_media_links: {
                  all: true,
                  choices: [
                    'facebook', 'twitter', 'instagram', 'linkedin',
                    'youtube', 'pinterest', 'tumblr', 'snapchat'
                  ]
                },
                website_socials: {
                  all: true,
                  choices: [
                    'facebook', 'twitter', 'instagram', 'linkedin',
                    'youtube', 'pinterest', 'tumblr', 'snapchat'
                  ]
                }
              },
              api_key: '<YOUR API KEY>'
            });
            console.log(response.data); // Output the response data
          } catch (error) {
            console.error('Error fetching data:', error);
          }
        };

        fetchData();
      }, []);

      return (
        <div>
          <h1>Website Crawler API</h1>
          <p>Fetching website information...</p>
        </div>
      );
    };

    export default WebsiteCrawler;

Flutter Example

1. WEB CRAWLER

- Define the API Endpoint URL: This is the URL where the POST request will be sent. Replace <YOUR API KEY> with your actual API key.

- Define the Request Body: This is the data that will be sent in the request. It includes the URL of the website to crawl, parameters for the extraction process, and your API key.

- Make a POST Request: Use the http.post function to send the request to the API endpoint with the defined headers and request body. Await the response to get the result.

    import 'package:http/http.dart' as http;
    import 'dart:convert';

    void main() async {
      // Step 1: Define the API endpoint URL
      final String apiUrl = 'https://www.uxlive.me/api/v1/website-info-extractor/';

      // Step 2: Define the request body in JSON format
      final Map<String, dynamic> requestBody = {
        "web_url": "https://vcssbp.odishavikash.com/",
        "max_search_depth": 0,
        "info_request": {
          "addresses": true,
          "emails": true,
          "links": true,
          "logos": true,
          "name": true,
          "pages_url": ["about", "contact", "careers", "services", "products"],
          "phone_numbers": true,
          "social_media_links": {
            "all": true,
            "choices": [
              "facebook", "twitter", "instagram", "linkedin",
              "youtube", "pinterest", "tumblr", "snapchat"
            ]
          },
          "website_socials": {
            "all": true,
            "choices": [
              "facebook", "twitter", "instagram", "linkedin",
              "youtube", "pinterest", "tumblr", "snapchat"
            ]
          }
        },
        "api_key": "<YOUR API KEY>"
      };

      // Step 3: Make a POST request to the API endpoint
      final response = await http.post(
        Uri.parse(apiUrl),
        headers: <String, String>{
          'Content-Type': 'application/json; charset=UTF-8',
        },
        body: jsonEncode(requestBody),
      );

      // Display the response from the API
      print('Response status: ${response.statusCode}');
      print('Response body: ${response.body}');
    }

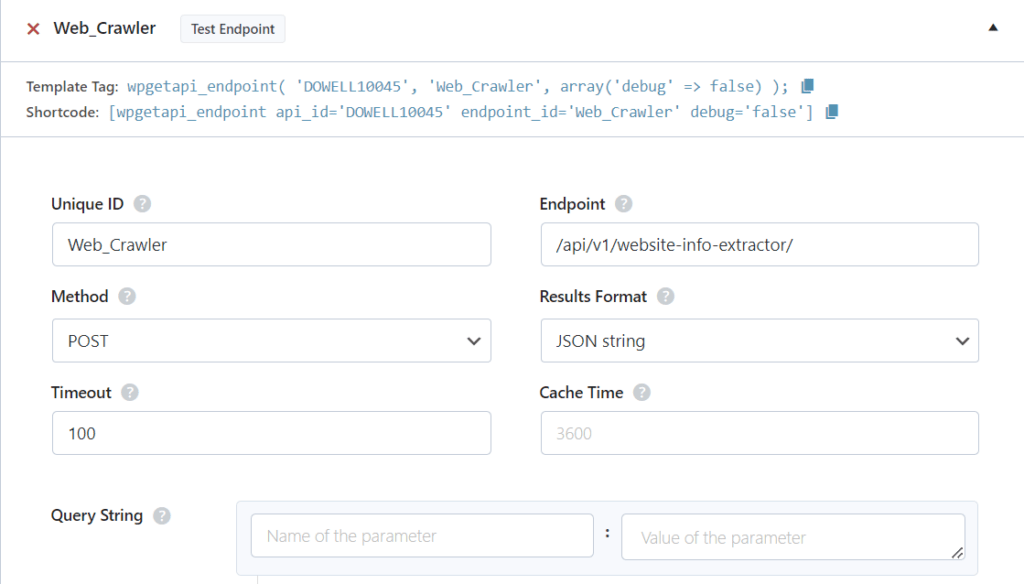

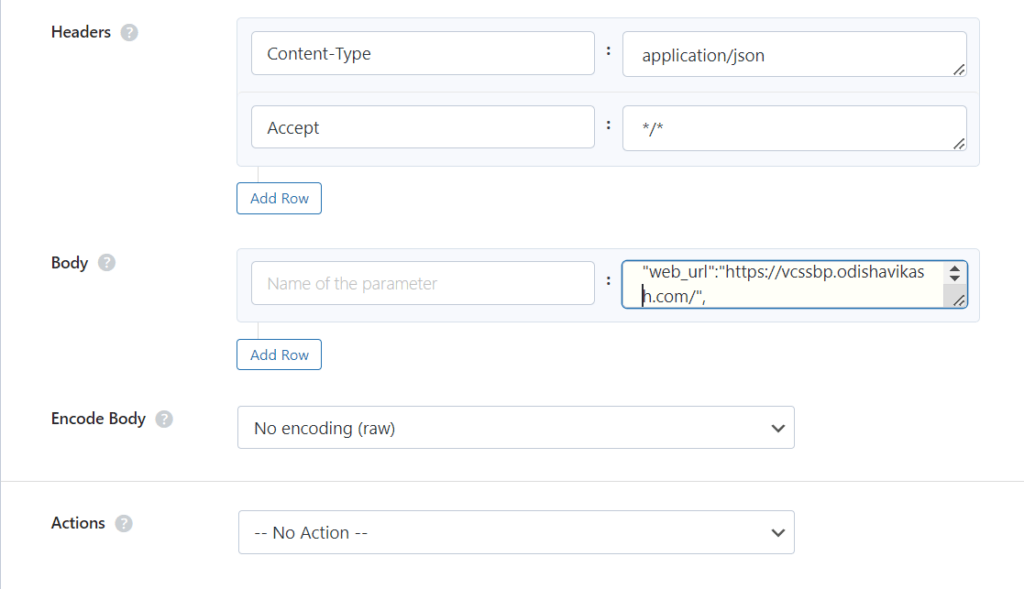

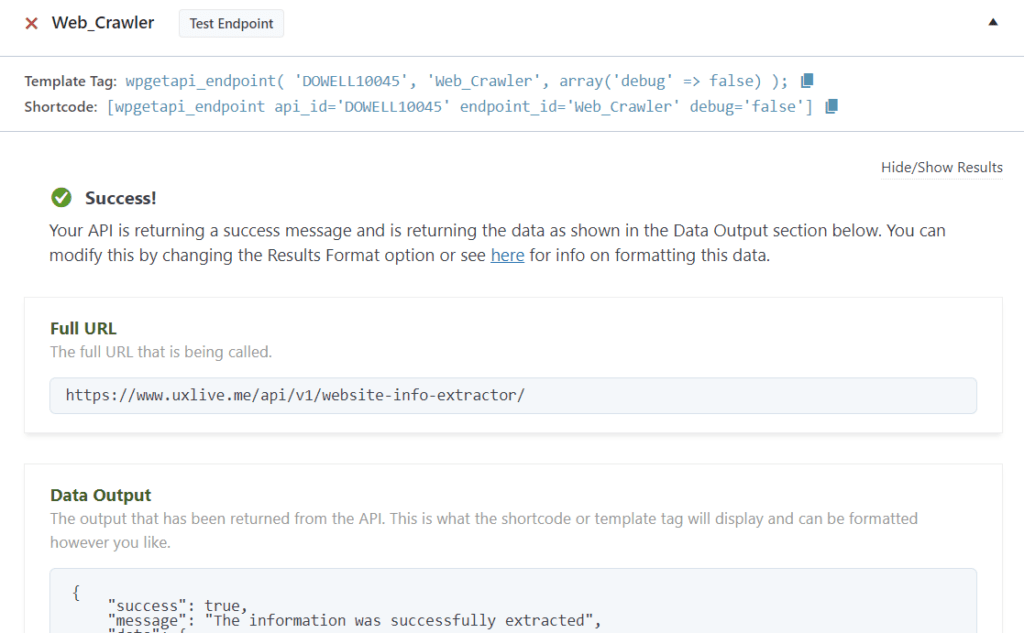

WordPress Example

1. WEB CRAWLER

Step 1: Set up the API name, unique ID, and base URL (below). The WP-GET API plugin must already be installed on your WordPress website.

Step 2: Establish the API endpoint with the inclusion of the API key, and configure the request body to contain the required POST fields.

Step 3: Test the endpoint to obtain a JSON response from the API. Ensure that the API is functioning correctly by sending a test request and examining the response.

Step 4: To display the output fetched from the Website Crawler API service, copy the shortcode provided by the plugin and add it to your WordPress website page.

D’Well Research validates and explores each stage of open innovation using user experience research from the field to support user-centered product design of medium and large companies globally.
